As organizations modernize their data platforms, serverless execution models are increasingly positioned as the default choice, promising elasticity, simplified operations, and optimized costs. In practice, however, enterprise data ecosystems often involve a diverse set of integrations that challenge a purely serverless approach.

During a recent assignment for a leading US healthcare organization, we encountered this situation while optimizing a Databricks-based data platform. The platform supported multiple jobs ingesting data from external systems, including SQL Server databases, Kafka topics, and web services, followed by a series of downstream transformation and analytics workloads.

While workloads executed reliably on dedicated Databricks compute, moving the same jobs to serverless compute resulted in intermittent failures, primarily related to connectivity, authentication, and access. Because these failures were sporadic, they were difficult to predict and troubleshoot, and they raised concerns about platform stability for production-grade pipelines.

Understanding the Challenge

A deeper analysis revealed that the issue was not related to application logic or data volumes, but rather to the differences in execution, networking, and identity models between dedicated and serverless compute.

Serverless compute operates in a fully Databricks-managed, ephemeral runtime environment. Executors are short-lived, networking is abstracted, and outbound traffic originates from shared and rotating IP ranges. Identity and access rely on short-lived tokens scoped to the execution context. While this model is highly effective for stateless processing, it introduces challenges when workloads depend on:

- Private or on-premises network connectivity

- Static IP allowlists

- Long-lived JDBC or Kafka connections

- Legacy or certificate-based authentication mechanisms

Dedicated compute, in contrast, provides stable networking, predictable identity behavior, and tighter integration with customer-managed infrastructure, which is often a necessity in regulated healthcare environments.

Solution Approaches Evaluated

To address these challenges, three architectural options were evaluated in detail:

1. Moving the Entire Workload to Dedicated Compute

This approach involved running all ingestion, transformation, and analytics workloads on dedicated job clusters. From a technical standpoint, this ensured:

- Consistent network paths to external systems

- Reliable authentication and connection reuse

- Minimal changes to existing ingestion logic

However, this model introduced inefficiencies:

- Transformation jobs required clusters to be provisioned and kept alive even when idle

- Autoscaling needed careful tuning to balance performance and cost

- Operational overhead increased due to cluster lifecycle management

For workloads that were inherently short-running and stateless, this approach resulted in underutilized resources and higher overall costs.

2. Refactoring External Integrations for Full Serverless Compatibility

This option focused on modifying upstream systems and access patterns to better align with serverless constraints. From a technical perspective, this would have required:

- Exposing databases and services via public endpoints

- Eliminating IP-based access controls

- Migrating to token-based or OAuth authentication

- Replacing legacy Kafka deployments with cloud-native alternatives

While feasible in theory, this approach was not practical due to:

- Security and compliance constraints specific to healthcare data

- Dependencies on existing enterprise infrastructure

- The scope and risk associated with re-architecting multiple external systems

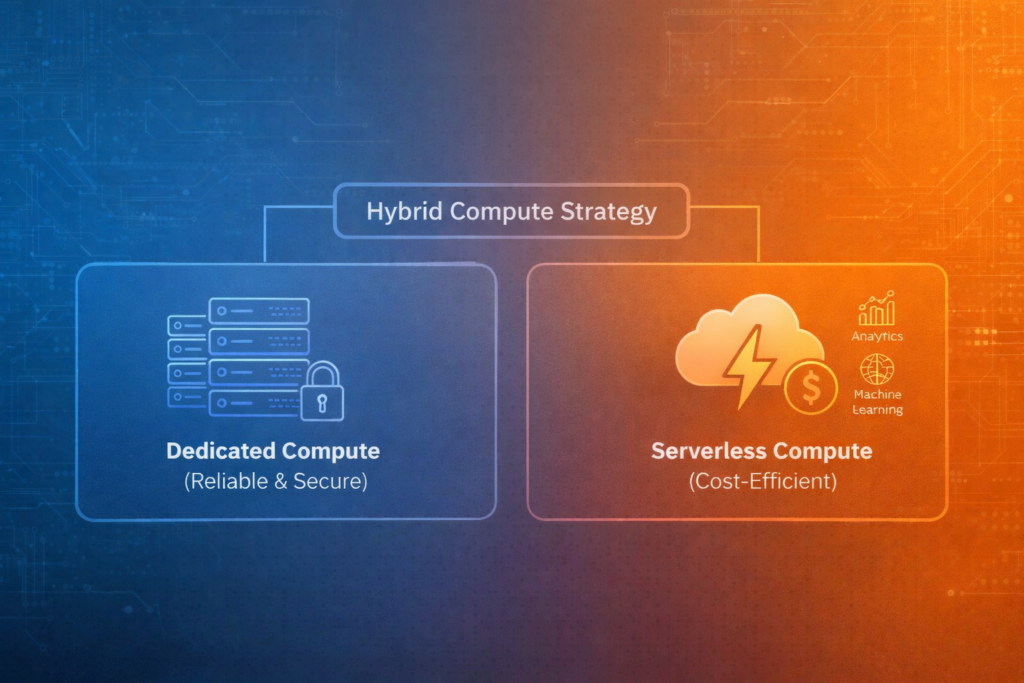

3. Introducing a Hybrid Compute Strategy (Selected Approach)

The third option focused on aligning compute types with workload characteristics, rather than enforcing a single execution model. This approach allowed each class of workload to run on the compute environment best suited to its technical requirements.
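One way to make this routing rule explicit is a small decision function. The sketch below is illustrative only: the `WorkloadProfile` fields, the example task names, and the rule "any external constraint means dedicated compute" are assumptions that mirror the four dependency types listed earlier, not code from the engagement.

```python
from dataclasses import dataclass
from enum import Enum


class Compute(Enum):
    DEDICATED = "dedicated"
    SERVERLESS = "serverless"


@dataclass(frozen=True)
class WorkloadProfile:
    """Hypothetical description of a task's external dependencies."""
    name: str
    needs_private_network: bool = False         # on-premises or private endpoints
    needs_static_ip: bool = False               # firewall rules or IP allowlists
    holds_long_lived_connections: bool = False  # JDBC sessions, Kafka consumers
    uses_legacy_auth: bool = False              # e.g. client certificates


def choose_compute(profile: WorkloadProfile) -> Compute:
    """Send a task to dedicated compute if it has any dependency the
    serverless runtime handles poorly; otherwise prefer serverless."""
    constrained = (
        profile.needs_private_network
        or profile.needs_static_ip
        or profile.holds_long_lived_connections
        or profile.uses_legacy_auth
    )
    return Compute.DEDICATED if constrained else Compute.SERVERLESS


if __name__ == "__main__":
    tasks = [
        WorkloadProfile("ingest_sqlserver", needs_private_network=True,
                        holds_long_lived_connections=True),
        WorkloadProfile("ingest_kafka", holds_long_lived_connections=True),
        WorkloadProfile("partner_api", needs_static_ip=True, uses_legacy_auth=True),
        WorkloadProfile("cleanse_claims"),  # reads Delta tables only
    ]
    for t in tasks:
        print(f"{t.name}: {choose_compute(t).value}")
```

In practice the decision is rarely this binary, but writing it down this way makes the criteria reviewable when new jobs are onboarded.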

Solution Implementation

The final solution implemented a hybrid Databricks compute architecture, explicitly separating external ingestion from internal data processing, while maintaining a unified orchestration model.
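In Databricks Jobs, this kind of split can be expressed as a single multi-task job. The sketch below builds a Jobs API 2.1-style payload in Python: ingestion tasks are bound to a classic job cluster through `job_cluster_key`, while transformation tasks carry no cluster field and are assumed to run on serverless compute (this assumes serverless jobs compute is enabled in the workspace). Task names, notebook paths, and the `<runtime-version>` and `<node-type-id>` placeholders are hypothetical; verify field behavior against the Jobs API reference before submitting a payload like this.

```python
import json
from typing import Optional, Sequence


def notebook_task(key: str, path: str, depends_on: Sequence[str] = (),
                  cluster_key: Optional[str] = None) -> dict:
    """Build one task entry. Setting job_cluster_key selects classic compute;
    omitting every cluster field is assumed to select serverless."""
    task = {"task_key": key, "notebook_task": {"notebook_path": path}}
    if depends_on:
        task["depends_on"] = [{"task_key": d} for d in depends_on]
    if cluster_key is not None:
        task["job_cluster_key"] = cluster_key
    return task


def build_hybrid_job(name: str) -> dict:
    """Payload with a classic job cluster for external ingestion and
    serverless downstream tasks, orchestrated as one DAG."""
    return {
        "name": name,
        "job_clusters": [{
            "job_cluster_key": "ingest_cluster",
            "new_cluster": {
                "spark_version": "<runtime-version>",  # placeholder
                "node_type_id": "<node-type-id>",      # placeholder
                "num_workers": 2,
            },
        }],
        "tasks": [
            # Dedicated compute: private-network JDBC and Kafka ingestion.
            notebook_task("ingest_sqlserver", "/Workspace/pipelines/ingest_sqlserver",
                          cluster_key="ingest_cluster"),
            notebook_task("ingest_kafka", "/Workspace/pipelines/ingest_kafka",
                          cluster_key="ingest_cluster"),
            # Serverless: reads only the Delta tables written by ingestion.
            notebook_task("standardize", "/Workspace/pipelines/standardize",
                          depends_on=["ingest_sqlserver", "ingest_kafka"]),
            notebook_task("aggregate", "/Workspace/pipelines/aggregate",
                          depends_on=["standardize"]),
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_hybrid_job("claims_pipeline"), indent=2))
```

The `depends_on` edges keep orchestration in one job: with the default run condition, a failed ingestion task prevents the dependent serverless tasks from starting.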

1. Dedicated Compute for External Ingestion

All tasks responsible for interacting with external systems were executed on dedicated job clusters. These tasks included:

- JDBC-based ingestion from SQL Server databases hosted in private networks

- Kafka consumers requiring stable consumer group membership and offset management

- API integrations dependent on firewall rules, allowlists, or client certificates

Dedicated compute provided:

- Stable outbound IPs and predictable routing

- Compatibility with private endpoints, VPNs, and enterprise firewalls

- Support for long-lived connections and stateful clients

- Reduced risk of transient connectivity and authentication failures

These ingestion tasks wrote data to Delta tables in cloud object storage, establishing a durable and reliable handoff point between compute environments.
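As an illustration of the ingestion side, the helper below assembles Spark JDBC reader options for a SQL Server source. It is a sketch under assumptions: the host, database, table, and credential values are placeholders, `encrypt=true` and the fetch size are example settings, and the Spark read and Delta write calls appear only as comments because they need a live Spark session on the dedicated cluster.

```python
def sqlserver_jdbc_options(host: str, database: str, table: str, user: str,
                           password: str, port: int = 1433,
                           fetch_size: int = 10_000) -> dict:
    """Build Spark JDBC reader options for a SQL Server source.

    All connection values are hypothetical placeholders. In Databricks the
    password would typically come from a secret scope, for example
    dbutils.secrets.get(scope="<scope>", key="<key>")."""
    return {
        "url": f"jdbc:sqlserver://{host}:{port};databaseName={database};encrypt=true",
        "driver": "com.microsoft.sqlserver.jdbc.SQLServerDriver",
        "dbtable": table,
        "user": user,
        "password": password,
        "fetchsize": str(fetch_size),  # rows fetched per round trip
    }


# On the dedicated job cluster (not executed here; requires a Spark session):
#   opts = sqlserver_jdbc_options("sql.internal.example", "claims", "dbo.members",
#                                 "svc_reader", password)
#   df = spark.read.format("jdbc").options(**opts).load()
#   df.write.format("delta").mode("append").saveAsTable("bronze.members")
```

The Delta table written at the end (here the hypothetical `bronze.members`) is the handoff point that serverless tasks read from, so they never need the private network route to SQL Server.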

2. Serverless Compute for Transformations and Analytics

All downstream processing was executed using serverless compute, including:

- Data standardization and cleansing

- Business rule application

- Aggregations and dimensional modeling

- Preparation of consumption-ready datasets

From a technical standpoint, these workloads:

- Did not require direct access to external systems

- Were stateless and idempotent

- Relied exclusively on Delta Lake as the system of record
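Idempotency is what lets a serverless task be retried or rerun safely. The pure-Python sketch below mimics upsert (MERGE-style) semantics over an in-memory table keyed by a hypothetical `member_id`, with a hypothetical cleansing rule; it stands in for a Delta `MERGE INTO` and is not the pipeline's actual code. Applying the same batch twice leaves the table unchanged.

```python
from typing import Dict, Hashable, Iterable, Mapping

Table = Dict[Hashable, dict]


def standardize(row: Mapping[str, object]) -> dict:
    """Hypothetical cleansing rule: trim strings and upper-case state codes."""
    out = {k: v.strip() if isinstance(v, str) else v for k, v in row.items()}
    if isinstance(out.get("state"), str):
        out["state"] = out["state"].upper()
    return out


def merge_upsert(target: Table, batch: Iterable[Mapping[str, object]], key: str) -> Table:
    """Upsert semantics: rows whose key exists are replaced, new keys are
    inserted. Returns a new table and never mutates the input, so the result
    depends only on the arguments."""
    result = {k: dict(v) for k, v in target.items()}
    for row in batch:
        clean = standardize(row)
        result[clean[key]] = clean  # batch keys assumed unique; last one wins
    return result


if __name__ == "__main__":
    silver = {1: {"member_id": 1, "state": "NY"}}
    bronze_batch = [
        {"member_id": 1, "state": " ny "},
        {"member_id": 2, "state": "ca"},
    ]
    once = merge_upsert(silver, bronze_batch, key="member_id")
    twice = merge_upsert(once, bronze_batch, key="member_id")
    print(once == twice)  # True: rerunning the task does not change the result
```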

Serverless compute enabled:

- Rapid startup with no cluster provisioning delays

- Automatic scaling based on workload demand

- Elimination of idle compute between job runs

- Simplified operational management

Each task operated independently, with data exchange handled through persistent storage rather than in-memory or cluster-local dependencies, ensuring clean separation and fault isolation.

Cost Considerations and Outcomes

Although serverless compute has a higher per-unit cost, it proved to be more economical in this specific context. Transformation workloads were short-lived and executed on a scheduled or event-driven basis, making them poor candidates for always-on or long-running clusters.

By eliminating idle time and operational overhead, serverless compute reduced the total cost of ownership for downstream processing while maintaining performance and scalability.
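The trade-off can be made concrete with simple arithmetic. In the sketch below, all rates and run durations are hypothetical and expressed in generic cost units, not figures from this engagement; it assumes the dedicated cluster is billed for every hour it stays up and serverless only for execution time. Under those assumptions, serverless is cheaper whenever the cluster's busy fraction falls below the ratio of the dedicated rate to the serverless rate.

```python
from typing import Sequence


def dedicated_cost(hours_alive: float, rate_per_hour: float) -> float:
    """Assumed billing: every hour the cluster is up, busy or idle."""
    return hours_alive * rate_per_hour


def serverless_cost(run_minutes: Sequence[float], rate_per_hour: float) -> float:
    """Assumed billing: execution time only."""
    return sum(run_minutes) / 60.0 * rate_per_hour


def break_even_utilization(dedicated_rate: float, serverless_rate: float) -> float:
    """Busy fraction below which serverless is cheaper:
    busy_hours * serverless_rate < hours_alive * dedicated_rate
    <=> busy_hours / hours_alive < dedicated_rate / serverless_rate."""
    return dedicated_rate / serverless_rate


if __name__ == "__main__":
    # Hypothetical inputs only.
    d_rate, s_rate = 4.0, 7.0   # cost units per hour
    runs = [15.0] * 12          # twelve 15-minute transformation runs per day
    print(dedicated_cost(10.0, d_rate))                       # 40.0
    print(serverless_cost(runs, s_rate))                      # 21.0
    print(round(break_even_utilization(d_rate, s_rate), 3))   # 0.571
```

With these illustrative inputs the cluster is busy only 3 of its 10 hours (30%), well below the roughly 57% break-even point, so the higher per-unit serverless rate still yields the lower total.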

Key Learnings and Closing Perspective

This engagement highlighted several important architectural insights:

- Serverless compute is best suited for stateless, bursty, and cloud-native workloads

- Dedicated compute remains critical for external ingestion and private connectivity

- A hybrid compute model provides a pragmatic balance between reliability, security, and cost

- Cost optimization is most effective when driven by workload behavior, not compute type alone

By adopting this hybrid approach, the organization successfully modernized its Databricks platform, retaining reliability for mission-critical ingestion while using serverless compute to achieve measurable cost and operational efficiencies. For complex enterprise data platforms, particularly in regulated healthcare environments, this strategy offers a scalable and practical path forward.